I was pondering non-deterministic, shareware nagging. Suppose that every time you performed a particular function, I wanted to randomly decide whether to nag you this time or not. Suppose further that I'd prefer not to have to keep track of how long it's been since I last nagged you, but I'd still like you to be nagged about once every $n$ times. Naïvely, one would expect that if I just chose a random number uniformly from the range zero to one and nagged you whenever that number is less than $\frac{1}{n}$, this would do the trick. You would expect that if you rolled an $n$-sided die each time, you'd roll a one about every $n$-th time.
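Here is a minimal Python sketch of that memoryless nag check. The names `should_nag` and `draw` are hypothetical; the only thing taken from the text is the rule "nag when a uniform draw from $[0, 1)$ is less than $\frac{1}{n}$". Nothing about previous nags is stored.

```python
import random
from typing import Callable


def should_nag(n: int, draw: Callable[[], float] = random.random) -> bool:
    """Hypothetical helper: decide, independently on each call, whether to nag.

    Draws a uniform number from [0, 1) and nags when it is below 1/n,
    so on average one call in n nags. No history is kept between calls.
    """
    return draw() < 1.0 / n


if __name__ == "__main__":
    rng = random.Random(12345)  # seeded only so the demo is repeatable
    n = 10
    calls = 100_000
    nags = sum(should_nag(n, rng.random) for _ in range(calls))
    print(f"{nags} nags in {calls} calls: about one every {calls / nags:.2f} calls")
```

Passing the draw function in as a parameter is just a design choice that makes the check easy to test with a seeded `random.Random` instance.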

Me, I've learned never to trust my naïve intuition when it comes to probability questions. Well, it turns out that the naïve answer is perfectly correct in this case. Here is the epic journey I took to get there, though.

Here's what I wanted. I wanted to know the probability needed so that the expected number of trials until the first success is some number $n$, given that each trial is independent and is successful a proportion $p$ of the time. Suppose for a moment that I decide to roll a six-sided die until I get a one. What is the expected number of times that I need to roll that die to get my first one? What I want here is the inverse problem. How many sides should my die have if I want it to take $n$ tries (on average) to get my first one?
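As a sanity check on the forward problem, a short Monte Carlo simulation in Python can estimate how many rolls an $s$-sided die takes, on average, to show its first one. The helper names `rolls_until_one` and `average_rolls` are hypothetical, and the trial count and seed are arbitrary choices.

```python
import random


def rolls_until_one(sides: int, rng: random.Random) -> int:
    # Roll a fair die with the given number of sides until it shows a one;
    # return how many rolls that took, counting the successful roll.
    rolls = 1
    while rng.randint(1, sides) != 1:
        rolls += 1
    return rolls


def average_rolls(sides: int, trials: int = 100_000, seed: int = 2024) -> float:
    # Monte Carlo estimate of the expected number of rolls to the first one.
    rng = random.Random(seed)
    return sum(rolls_until_one(sides, rng) for _ in range(trials)) / trials


if __name__ == "__main__":
    for sides in (2, 6, 20):
        print(sides, round(average_rolls(sides), 2))
```

This only estimates the forward quantity (about six rolls for a six-sided die); it gives the closed-form answer something to be checked against.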

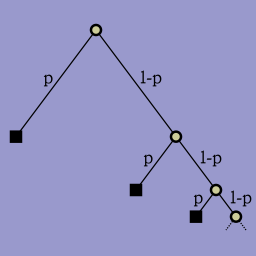

In the picture at the right, each circle represents a trial. Each square represents a success. What I am looking for is what value I should pick for $p$ so that if you go left a proportion $p$ of the time and go right the other $1-p$ proportion of the time, you will expect to hit $n$ circles along your descent. You could, if very unlucky (or lucky, in this case, since I'm going to nag you), go forever to the right and never get to a square. So, already, we're expecting an infinite sum.

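Indeed, crossing exactly $k$ circles means going right $k-1$ times and then left once, which happens with probability $(1-p)^{k-1}\,p$. So the expected number of circles you cross in a random descent of this tree is $\sum_{k=1}^{\infty} k\,p\,(1-p)^{k-1}$. Massaging this a little (pulling out a factor of $\frac{p}{1-p}$, for $0 < p < 1$), we can get it into a simpler form:

$$\frac{p}{1-p}\sum_{k=1}^{\infty} k\,(1-p)^k.$$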
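To watch the series behave, here is a small Python sketch that computes partial sums of $\sum_{k\ge1} k\,p\,(1-p)^{k-1}$, both directly and in the form $\frac{p}{1-p}\sum_{k\ge1} k\,(1-p)^k$. The function names and the choice $p = 0.25$ in the demo are illustrative only.

```python
def expected_circles_partial(p: float, terms: int) -> float:
    # Partial sum of sum_{k>=1} k * p * (1 - p)**(k - 1).
    # (1 - p)**(k - 1) * p is the chance of k - 1 steps right, then one step left.
    return sum(k * p * (1 - p) ** (k - 1) for k in range(1, terms + 1))


def massaged_partial(p: float, terms: int) -> float:
    # The same partial sum written as p/(1-p) * sum_{k>=1} k * (1-p)**k.
    # Only valid for 0 < p < 1, since p = 1 would divide by zero.
    x = 1 - p
    return p / x * sum(k * x ** k for k in range(1, terms + 1))


if __name__ == "__main__":
    p = 0.25
    for terms in (5, 20, 100):
        print(terms, expected_circles_partial(p, terms), massaged_partial(p, terms))
```

For $p = 0.25$ the partial sums creep up toward 4, which already hints at where the algebra is heading.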

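That infinite series is pretty nasty looking, though. So, we whip out a handy table of infinite series and see that, for $|x| < 1$,

$$\sum_{k=0}^{\infty} (a + kd)\,x^k = \frac{a}{1-x} + \frac{d\,x}{(1-x)^2}.$$

Fortunately, in our case, $a = 0$ and $d = 1$, so the first term vanishes and we are left with

$$\sum_{k=0}^{\infty} k\,x^k = \frac{x}{(1-x)^2}.$$

Now, we note that when $k = 0$, the term $k\,x^k$ is zero, so starting the sum at $k = 1$ changes nothing:

$$\sum_{k=1}^{\infty} k\,x^k = \frac{x}{(1-x)^2}.$$

And, since $0 < p < 1$, the quantity $1 - p$ lies strictly between zero and one, comfortably inside the region $|x| < 1$ where this formula holds.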
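A quick numerical sanity check of the standard arithmetico-geometric series $\sum_{k\ge0}(a+kd)\,x^k = \frac{a}{1-x}+\frac{d\,x}{(1-x)^2}$ for $|x|<1$, whose $a = 0$, $d = 1$ case is the sum needed here. This Python sketch compares a long partial sum against the closed form; the function names and test values are arbitrary.

```python
def arith_geo_partial(a: float, d: float, x: float, terms: int) -> float:
    # Partial sum of sum_{k>=0} (a + k*d) * x**k, using the first `terms` terms.
    return sum((a + k * d) * x ** k for k in range(terms))


def arith_geo_closed(a: float, d: float, x: float) -> float:
    # Closed form of the infinite series, valid only for |x| < 1.
    return a / (1 - x) + d * x / (1 - x) ** 2


if __name__ == "__main__":
    for a, d, x in [(0, 1, 0.5), (0, 1, 0.9), (2, 3, -0.4)]:
        print(a, d, x, arith_geo_partial(a, d, x, 2000), arith_geo_closed(a, d, x))
```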

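Whew! Big sigh. We're through the ugly parts. Now, in our case, $x = 1 - p$, and the equation we were interested in was

$$n = \frac{p}{1-p}\sum_{k=1}^{\infty} k\,(1-p)^k.$$

Plugging in $1 - p$ for the $x$ in our infinite series solution, we find that

$$n = \frac{p}{1-p}\cdot\frac{1-p}{\bigl(1-(1-p)\bigr)^2} = \frac{p}{p^2} = \frac{1}{p}.$$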

Two pages of ugly, ugly algebra bear out the simple guess. Wheee! If we want the expected number of trials to be $n$, then we have to pick $p = \frac{1}{n}$. Hooray!
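The final step can be double-checked with exact rational arithmetic. This Python sketch evaluates $\frac{p}{1-p}\cdot\frac{x}{(1-x)^2}$ with $x = 1 - p$ using `fractions.Fraction`, so there is no floating-point rounding to argue about. The name `expected_trials` is hypothetical, and the formula requires $0 < p < 1$.

```python
from fractions import Fraction


def expected_trials(p: Fraction) -> Fraction:
    # n = p/(1-p) * x/(1-x)**2 with x = 1 - p, evaluated exactly.
    # Requires 0 < p < 1: p = 1 makes x = 0 and the first factor divides by zero.
    x = 1 - p
    return p / x * (x / (1 - x) ** 2)


if __name__ == "__main__":
    # Choosing p = 1/n should give back exactly n expected trials.
    for n in (2, 6, 1000):
        p = Fraction(1, n)
        print(n, p, expected_trials(p))
```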

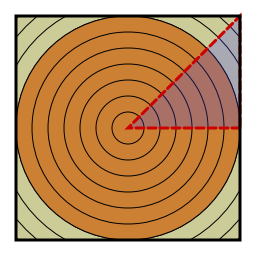

How about two dimensions? This is immediately more awkward. The one-dimensional disk looks exactly like the one-dimensional square. In two dimensions, the disk and square are quite different. We either have to integrate over the square and calculate the radius of each point, or we have to integrate over increasing radii and be careful once we get to the in-radius of the square.
