With most logic or word puzzles, your goal is to find a solution. To make those puzzles interesting, the puzzle maker has to ensure that there is a solution and that the solution is unique. This is true for crossword puzzles, sudoku puzzles, cryptograms, rebuses (rebi?), mazes, Lights Out games, and binary logic puzzles of this form: Alice is wearing red. John is older than Jimmy. The tallest person is not wearing green. Clue. Clue. Clue. Who is standing next to Alice?

Rush Hour games and tangrams are also usually crafted so that there is a unique solution.

Uniqueness

For some of these games, we mean a unique solution amongst all solutions without backtracking. For example, in solving a maze, we would not consider solutions that involve running down a corridor only to come back along the same corridor. For Lights Out games, the concept is simple but describing it takes work. Imagine that every square on the board, in addition to being lit or not, also has a letter ‘A’ on it at the start of the game. Whenever you press a button labelled ‘A’, it gets relabelled ‘B’. Every time you press a button labelled ‘B’, it gets relabelled ‘A’. Two solutions are identical if the board has the same configuration of ‘A’s and ‘B’s after both sequences of moves. Thus, hitting any button more than once is just a waste of time, and the order in which you hit the buttons makes no difference at all.
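The ‘A’/‘B’ bookkeeping can be sketched in a few lines of Python. This is only an illustration under my own assumptions: a 3×3 board, the usual rule that a press toggles the button and its orthogonal neighbours, and helper names (`press`, `play`, `canonical`) that I made up for the example.

```python
from collections import Counter

def press(board, row, col):
    """Return a new board with (row, col) and its orthogonal neighbours toggled."""
    n = len(board)
    new = [r[:] for r in board]
    for dr, dc in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
        r2, c2 = row + dr, col + dc
        if 0 <= r2 < n and 0 <= c2 < n:
            new[r2][c2] ^= 1
    return new

def play(board, moves):
    """Apply a sequence of presses, in order."""
    for row, col in moves:
        board = press(board, row, col)
    return board

def canonical(moves):
    """Buttons that end up labelled 'B': those pressed an odd number of times."""
    return {move for move, count in Counter(moves).items() if count % 2}

start = [[1, 0, 1],
         [0, 1, 0],
         [1, 1, 0]]

# Two different-looking move sequences: repeats and a different order.
moves_a = [(0, 0), (1, 1), (0, 0), (2, 2), (0, 0)]
moves_b = [(2, 2), (0, 0), (1, 1)]

same_labels = canonical(moves_a) == canonical(moves_b)
same_board = play(start, moves_a) == play(start, moves_b)
```

Both sequences leave the same set of buttons labelled ‘B’, so they count as the same solution, and they leave the lights in the same state.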

There is a notable crossword puzzle with two solutions. There are some notorious Sudoku puzzles with two solutions. I don’t know of any cryptograms with two solutions, but I am going to go asearchin’ some time.

Technically, a Sudoku puzzle with two solutions cannot be solved. You had to make a guess somewhere and it turned out you had twice the odds of being right that you should have. It is easy to accidentally take too many numbers out of the Sudoku grid and end up with an ambiguous puzzle. For a crossword puzzle, I doubt most people bother to check to see if the solution is unique. It probably is unique by sheer combinatorics. The same may be true of cryptograms, as well. You’d have to specifically be trying to make them ambiguous to achieve it.
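Here is a rough sketch of how a puzzle maker might check for ambiguity after removing numbers. To keep it short I am assuming a 4×4 mini-Sudoku (digits 1 to 4, 2×2 boxes) rather than the full 9×9 grid, and the function names are my own. The counter stops as soon as it finds a second solution, since that is all the maker needs to know.

```python
def allowed(grid, r, c, v):
    """True if digit v can go at (r, c) without clashing in its row, column, or box."""
    if v in grid[r]:
        return False
    if any(grid[i][c] == v for i in range(4)):
        return False
    br, bc = 2 * (r // 2), 2 * (c // 2)
    return all(grid[br + i][bc + j] != v for i in range(2) for j in range(2))

def count_solutions(grid, limit=2):
    """Count completions of a 4x4 grid (0 = blank), giving up once `limit` is reached."""
    for r in range(4):
        for c in range(4):
            if grid[r][c] == 0:
                total = 0
                for v in range(1, 5):
                    if allowed(grid, r, c, v):
                        grid[r][c] = v
                        total += count_solutions(grid, limit - total)
                        grid[r][c] = 0  # undo the trial digit
                        if total >= limit:
                            return total
                return total
    return 1  # no blanks left: the filled grid is one solution

# Four numbers removed: the 1s and 3s in the top two rows can be swapped.
ambiguous = [[0, 2, 0, 4],
             [0, 4, 0, 2],
             [2, 1, 4, 3],
             [4, 3, 2, 1]]

# Putting a single number back removes the ambiguity.
repaired = [[1, 2, 0, 4],
            [0, 4, 0, 2],
            [2, 1, 4, 3],
            [4, 3, 2, 1]]

ambiguous_count = count_solutions(ambiguous)
repaired_count = count_solutions(repaired)
```

The first grid has two completions and the second has one. The same backtracking idea carries over to a 9×9 grid, though a serious checker would want smarter cell ordering.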

Trapdoors

Where are the trapdoors? Well, the puzzle creator has a much harder job than the puzzle solver for most games. It is easy to construct mazes and Lights Out games with unique solutions (in fact, it’s easy to show that on boards such as 3×3, where no nonempty set of button presses leaves the lights unchanged, a solvable Lights Out game has exactly one solution; the classic 5×5 board is an exception, with four solutions to every solvable game). It is not so easy to construct other puzzles that have provably unique solutions.

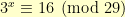

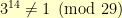

This brings us to the Discrete Logarithm Problem. Imagine that I told you that […] and asked you what […] is modulo 28. There is no really easy way to solve this problem, yet it is easy to formulate such problems. I checked that […]. Then, I picked […] and plugged it in to see that […]. I started from the answer and was quickly able to generate a problem that I can guarantee has a unique solution.

This is called a trapdoor function. It is easy to calculate, but hard to invert the calculation. It’s also not a very fun problem to do. You will never open up the comics page of your newspaper and find five of these problems below the bridge column and above the horoscopes.
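To make the asymmetry concrete, here is a small Python sketch. The modulus 29, the base 2, and the secret exponent 11 are my own picks for illustration and are not necessarily the numbers from the problem above; I chose 29 because it is prime, so exponents are only determined modulo 28. The function names are hypothetical too.

```python
P = 29   # assumed prime modulus; exponents live modulo P - 1 = 28
G = 2    # assumed base; checked below to generate every nonzero residue

def is_generator(g, p):
    """True if the powers g^1 .. g^(p-1) hit every nonzero residue mod p."""
    return len({pow(g, k, p) for k in range(1, p)}) == p - 1

def make_puzzle(secret, g=G, p=P):
    """Puzzle maker: a single fast modular exponentiation."""
    return pow(g, secret, p)

def solve_puzzle(target, g=G, p=P):
    """Puzzle solver: try every exponent and keep the ones that work."""
    return [x for x in range(p - 1) if pow(g, x, p) == target]

# The maker's check that guarantees uniqueness.
unique_guaranteed = is_generator(G, P)

# The maker starts from the answer...
target = make_puzzle(11)

# ...while the solver has to search.
solutions = solve_puzzle(target)
```

With these numbers the maker computes 2^11 ≡ 18 (mod 29) in one step, and the solver's search turns up 11 as the only exponent modulo 28. At this size the search is trivial; the gap between the two jobs only becomes serious when the modulus is very large.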

I can easily imagine a whole variety of Lights Out type games where lights have more than one state. It would be easy to prove, for a wide class of such games, that when a solution exists, it is unique. These would all be solvable by inverting a matrix whose coefficients come from a finite field.
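As a sketch of what that might look like, the code below treats a board whose lights cycle through p states, for a prime p, and solves it by Gauss-Jordan elimination modulo p. The rules are my assumption: pressing a button advances it and its orthogonal neighbours by one state. The names `press_matrix`, `apply_presses` and `solve_mod_p` are made up for the example. When the rank equals the number of buttons, a solution is unique whenever one exists.

```python
def press_matrix(n):
    """Entry [light][button] is 1 when pressing that button changes that light."""
    size = n * n
    a = [[0] * size for _ in range(size)]
    for row in range(n):
        for col in range(n):
            button = row * n + col
            for dr, dc in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
                r2, c2 = row + dr, col + dc
                if 0 <= r2 < n and 0 <= c2 < n:
                    a[r2 * n + c2][button] = 1
    return a

def apply_presses(a, presses, p):
    """Board reached from all-zero lights after pressing button j presses[j] times."""
    return [sum(aij * x for aij, x in zip(row, presses)) % p for row in a]

def solve_mod_p(a, b, p):
    """Solve a*x = b modulo prime p. Returns (x or None, rank)."""
    size = len(a)
    m = [row[:] + [bi % p] for row, bi in zip(a, b)]  # augmented matrix
    pivots, rank = [], 0
    for col in range(size):
        pivot = next((r for r in range(rank, size) if m[r][col]), None)
        if pivot is None:
            continue  # free column: no pivot available
        m[rank], m[pivot] = m[pivot], m[rank]
        inv = pow(m[rank][col], -1, p)  # modular inverse (Python 3.8+)
        m[rank] = [(v * inv) % p for v in m[rank]]
        for r in range(size):
            if r != rank and m[r][col]:
                f = m[r][col]
                m[r] = [(v - f * w) % p for v, w in zip(m[r], m[rank])]
        pivots.append(col)
        rank += 1
    if any(m[r][size] for r in range(rank, size)):
        return None, rank  # inconsistent system: no solution
    x = [0] * size
    for r, col in enumerate(pivots):
        x[col] = m[r][size]  # free variables are left at 0
    return x, rank

a = press_matrix(3)

# Two states: the maker picks presses, the solver recovers exactly those presses.
maker_presses = [1, 0, 0, 0, 1, 0, 0, 0, 1]
board = apply_presses(a, maker_presses, 2)
found, rank = solve_mod_p(a, board, 2)

# Three states: the solver finds presses that reproduce the maker's board.
maker_presses_3 = [2, 0, 1, 0, 0, 0, 1, 0, 2]
board_3 = apply_presses(a, maker_presses_3, 3)
found_3, rank_3 = solve_mod_p(a, board_3, 3)
```

On the 3×3 two-state board the rank is 9, so the solver gets back exactly what the maker pressed. For other board sizes or numbers of states, the returned rank tells you whether uniqueness holds. A number of states that is a prime power but not a prime would need genuine finite-field arithmetic rather than plain arithmetic mod p, which this sketch does not attempt.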

What other puzzles could take advantage of trapdoor functions to make the game easier on the puzzle maker than on the puzzle solver?

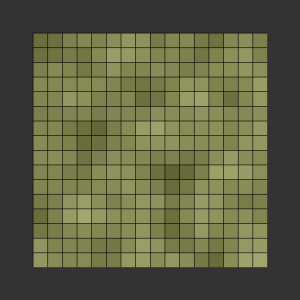

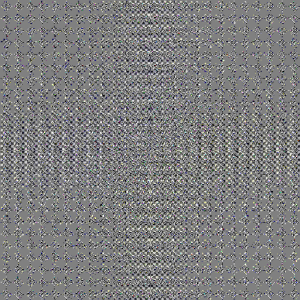

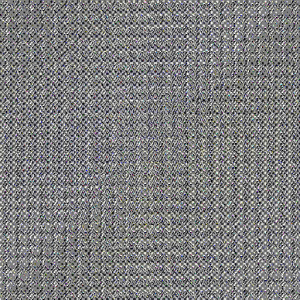

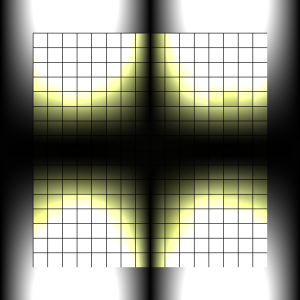

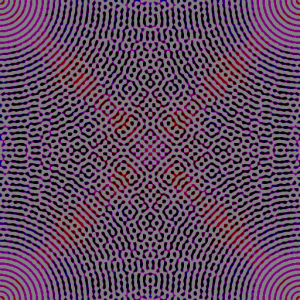

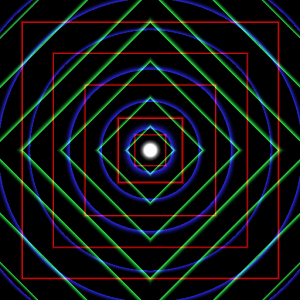

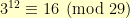

Here is one of the nifty designs that I generated with his program. I encourage you to download it and play around a little bit. It is really great to see in real-time how activating certain frequencies affects the output.

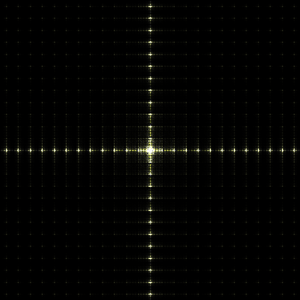

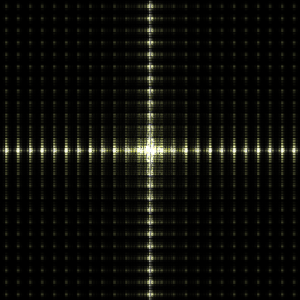

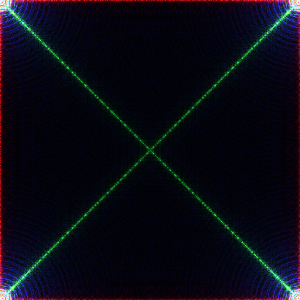

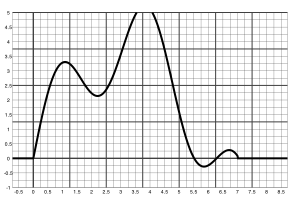

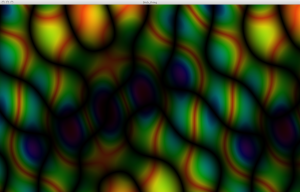

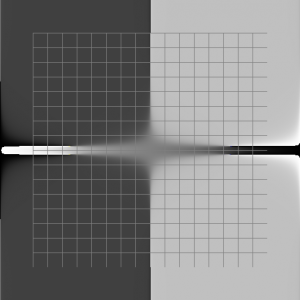

Here are the frequencies that I had activated for the above picture.