I live each day with the fear of a catastrophic disk crash.

Most of the data I care about lives on my MacBook Pro. My PowerBook had some disk drive issues at one point. Because it had been a month since I had backed it up, and that backup was partially scrogged, I lost about six weeks of work when that drive flipped out.

I mitigate this slightly by mirroring my VCS repositories to several different machines. I also download digital images to two different computers before deleting them from the camera. I still suffer through Retrospect backing up to DVD-R every few weeks.

I recorded the presentations from the last TC Lispers meeting. I expect to do the same at this month’s meeting. Because the raw material from last month was 48GB and because I only had about 45GB of space remaining on my drive, I needed to get that 48GB out of the way by next week. So, I was caught in a crisis.

It’s not data that I want to pay $0.15/GB/month to store. It’s not data that I just want to throw away. At the same time, I am probably not going to need that raw data again for at least a year.
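For scale, here is a quick sketch of that arithmetic, using only the 48GB and $0.15/GB/month figures from this post. The function name is made up for illustration, and a flat per-GB rate is an assumption.

```python
def monthly_storage_cost(size_gb, rate_per_gb_month):
    """Monthly cost in dollars of keeping size_gb at a flat per-GB rate."""
    return size_gb * rate_per_gb_month


raw_footage_gb = 48   # raw recordings from one meeting
rate = 0.15           # dollars per GB per month, as quoted above

monthly = monthly_storage_cost(raw_footage_gb, rate)
print(f"${monthly:.2f}/month, ${monthly * 12:.2f}/year")
```

That is a little over $7 a month for a single meeting's raw footage, and the bill grows with every meeting recorded.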

After struggling through the first six disks (which took me eleven disks to burn) trying to back up the data to DVD-R with Retrospect, and having backed up only about a quarter of my data at that point, I abandoned trying to use Retrospect to archive the data. So, now what?

Problems I Would Like to Solve

- Day-to-day Peace-of-mind: If my drive crashes, I haven’t lost too much time or irreproducible data

- Archival: There is some data I don’t want to throw away, but don’t need spinning next to me

- Fire-safety: If a small nuclear device explodes in my computer corner, I haven’t lost too much time or irreproducible data

My solutions

I have added two 1TB disks to my Linux box. I have set these up as a RAID-1 (technically, as a RAID-5 with one faulty-by-way-of-being-absent drive). I have set this filesystem up to be shared via AppleTalk, and I have set up Time Machine on my Mac to back up onto that filesystem. Turning on Time Machine was a significant pain. The how-to page is wonderful. However, File Vault and Time Machine don’t play nicely together. Turning off File Vault was terribly annoying. I will tell you more about that below.

So, this takes care of my Day-to-day Peace-of-mind. It has also given me a stop-gap on the Archival. I have a fair bit of space at the moment to drop 48GB. It’s spinning, but it isn’t costing me $7/month either.

I still have Fire-Safety to deal with. I have a 250GB external drive. I will probably use SuperDuper! or one of its cohorts to back up to that external drive. Then, I can lock the drive in the firebox or take it offsite. I will have to find some way to get backups from my Linux box onto the drive, too, but that seems fairly tractable.

For longer-term Archival, I am going to give ArchiveMac a shot.

If I find a good archival solution, then I may go with one of the online backup services for my Fire-Safety and/or Day-to-Day Peace-of-Mind.

If any of y’all have a good Archival or Fire-Safety strategy, I’d love to hear it.

The Problems that I’ve had with Retrospect

Note: I am using Retrospect 6 even though they are currently on version 8. I do not like 6, so I can’t imagine paying them more money.

I bought version 6 because it was the only software I could find at the time that allowed me to do full and incremental backups and back up to DVD. Actually, it turns out that it would only let me have full flexibility on the full/incremental stuff if I backed up to big media. When backing up to DVD, I couldn’t branch arbitrarily and do an incremental based on a chosen previous backup in the same heritage.

Even worse, Retrospect is very picky about both the drive and the media. I made many coasters trying to get backups working at all. Even when they were working, about three in eight disks were coasters before it even finished writing them. Of those it finished writing, about one in twelve were coasters-in-hiding.
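To put those rates together, here is a rough sketch. It takes the "three in eight" and "one in twelve" estimates above at face value and assumes each burn fails independently of the others; the variable names are only for illustration.

```python
from fractions import Fraction

# Rough failure rates quoted above (estimates, not measurements).
fail_while_writing = Fraction(3, 8)   # coaster before the burn finishes
hidden_coaster = Fraction(1, 12)      # finishes writing, but is bad anyway

# A disk is usable only if it survives both failure modes.
good_rate = (1 - fail_while_writing) * (1 - hidden_coaster)

# Expected number of blank disks consumed per usable disk.
burns_per_good_disk = 1 / good_rate

print(f"usable fraction: {good_rate} (about {float(good_rate):.0%})")
print(f"burns per usable disk: {float(burns_per_good_disk):.2f}")
print(f"burns for six usable disks: {float(6 * burns_per_good_disk):.1f}")
```

Under those assumptions, only a bit more than half of the burns produce a usable disk, which is in line with six disks taking eleven.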

Combining the coasters-in-hiding problem with being unable to pick the parent for my backup meant that if I didn’t do the backup with verification turned on, I couldn’t come back later and re-do the backup. Retrospect would consider that backup complete. If it wasn’t, then I had to go through and touch any files that I wanted to make sure got backed up on my next attempt.
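The touch-everything workaround can be sketched roughly like this. It assumes the backup tool decides what is "changed" from each file's modification time, which is a guess about the mechanism rather than documented behavior, and `touch_tree` is a hypothetical helper name.

```python
import os
from pathlib import Path


def touch_tree(root):
    """Set the modification time of every regular file under root to now.

    Returns the number of files touched. Symlinks are skipped so that
    nothing outside the tree gets its timestamp changed.
    """
    touched = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and not path.is_symlink():
            os.utime(path, None)  # None means "use the current time"
            touched += 1
    return touched
```

Calling `touch_tree` on the directory that was on the bad disk makes every file in it look newly modified, so the next incremental run should pick them all up again, at the cost of losing the original timestamps.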

The Problem with Time Machine and File Vault

I didn’t turn on Time Machine when I first upgraded to Leopard. I didn’t do this because Time Machine has no subtlety. It will keep that 48GB of data I may never need spinning and spinning. When Time Machine runs out of space, it starts getting rid of the oldest stuff. As such, until I run out of space, I’ve got this 48GB I may never need. Once it runs out of space, I don’t have old versions of things that have changed recently. It’s a nice Day-to-Day Peace-of-Mind, but it is not an Archival solution.

Early this year sometime, I turned on File Vault. There shouldn’t be anything particularly sensitive on my drive outside of my Keychain. On the other hand, there’s no reason someone stealing my laptop should get to have any of it. It seemed like an easy win.

Then, I went to turn on Time Machine yesterday. Time Machine warned me that my home directory was encrypted. As such, it could not back up my home directory as individual files. It could only back up my home directory as a whole. And, it could only do this when I am not logged in. When I am at home (which would be the only time I have access to the external drives that I’d be using Time Machine on anyhow), I am always logged in. I don’t want to have to restore my whole home directory somewhere to get back a file I shouldn’t have deleted. I would much rather it back things up only when I am logged in. How often do my files change when I am not changing them?

Fine, I’ll turn off File Vault. That wasn’t so easy. File Vault wanted me to free up 128GB of space on my home volume so it could decrypt itself. I only had about 70GB of space available.

I had to back some stuff up so I could delete it so I could make enough room to turn off File Vault so I could turn on backup software! It’s brilliant, brilliant, brilliant!

I moved the 48GB of raw presentations over to the RAID on the Linux box. This took about 45 minutes. Then, I moved my iTunes Music and my VMware disk images out of my home directory. This took about an hour.

Unfortunately, since File Vault was keeping my home directory as a Sparse Bundle on my main drive, moving things out of the Sparse Bundle and onto the main drive didn’t free up any space inside the Sparse Bundle. Thinking it would be faster to move stuff to a locally connected FireWire drive than over the network to the RAID, I deleted the 200GB of Retrospect backup catalogs that I had on the external drive and moved my iTunes Music and VMware disk images over there instead. This took another hour.

Finally, I got to turn off File Vault. I didn’t wait around for it to figure out how long it thought it would take. It took more than half an hour and less than an hour-and-a-half for it to finish that.

Then, I moved my iTunes Music and VMware disk images back from the external drive (another 45 minutes or more).

Then, finally, I turned on Time Machine.

I spent more time turning off File Vault and turning on Time Machine than I did buying disks, creating the RAID, setting up AppleTalk under Linux, and plopping the 48GB of raw presentations across a network onto the RAID.

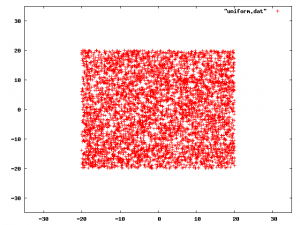

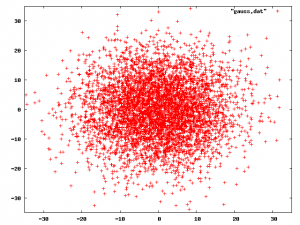

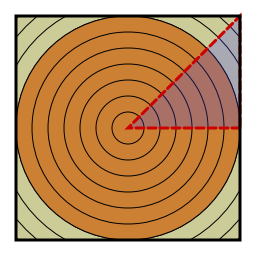

How about two dimensions? This is immediately more awkward. The one-dimensional disk looks exactly like the one-dimensional square. In two dimensions, the disk and square are quite different. We either have to integrate over the square and calculate the radius of each point, or we have to integrate over increasing radii and be careful once we get to the in-radius of the square.
